As with any toxic relationship, the possibility of a breakup sparks feelings of terror, and maybe a little bit of relief. That’s the spot that Facebook has put the news business in. In January, the social media behemoth announced it would once again alter its News Feed algorithm to show users even more posts from their friends and family, and a lot fewer from media outlets.

The move isn’t all that surprising. Ever since the 2016 election, the Menlo Park-based company has been under siege for creating a habitat where fake news stories flourished. Its executives were dragged before Congress last year to testify about how the company sold ads to Russians who wanted to influence the U.S. election, and so, in some ways, it’s simply easier to get out of the news business altogether.

But for the many news outlets that have come to rely on Facebook funneling readers to their sites, the impact of a separation sounds catastrophic.

“The End of the Social News Era?” a New York Times headline asked. “Facebook is breaking up with news,” an ad for the new BuzzFeed app proclaimed. In an open letter to Zuckerberg, San Francisco Chronicle Editor-in-Chief Audrey Cooper decried the social media company’s sudden change of course.

“We struggled along, trying to anticipate the seemingly capricious changes in your news-feed algorithm,” she wrote in the Jan. 12 missive. “We created new jobs in our newsrooms and tried to increase the number of people who signed up to follow our posts on Facebook. We were rewarded with increases in traffic to our websites, which we struggled to monetize.”

The strategy worked for a time, she says.

“We were successful in getting people to ‘like’ our news, and you started to notice,” she wrote in her column. “Studies show more than half of Americans use Facebook to get news. That traffic matters because we monetize it—it pays the reporters who hold the powerful accountable.”

But just as newspapers learned to master Facebook’s black box, so, too, did more nefarious operations, Cooper noted. Consumers, meanwhile, have grimaced as their favorite media outlets have stooped to sensational headlines to lure Facebook’s web traffic. They’ve become disillusioned by the flood of hoaxes and conspiracy theories that have run rampant on the site.

A Knight Foundation/Gallup poll released earlier this year revealed that only a third of Americans had a positive view of the media. About 57 percent said websites or apps using algorithms to determine which news stories readers see was a major problem for democracy. Two-thirds believed the media being “dramatic or too sensational in order to attract more readers or viewers” was a major problem.

Now, sites that relied on Facebook’s algorithm have watched the floor drop out from under them when the algorithm changed—all while Facebook has gobbled up chunks of the print advertising revenue that had always sustained news operations.

It’s all landed media outlets in a hell of a quandary: It sure seems like Facebook is killing journalism. But can journalism survive without it?

YOU WON’T BELIEVE WHAT HAPPENS NEXT

It’s perhaps the perfect summation of the internet age: a website that started because a college kid wanted to rank which co-eds were hotter became a global Goliath powerful enough to influence the fate of the news industry itself.

When Facebook first launched its News Feed in 2006, it ironically didn’t have anything to do with news. At least, not how we think of it. This was the site that still posted a little broken-heart icon when you changed your status from “In a Relationship” to “Single.”

The News Feed was intended to be a list of personalized updates from your friends. But in 2009, Facebook introduced its iconic “like” button. Soon, instead of showing posts in chronological order, the News Feed began showing you the popular posts first.

And that made all the difference.

Facebook didn’t invent going viral—grandmas with AOL accounts were forwarding funny emails and chain letters when Facebook founder Mark Zuckerberg was still in grade school—but its algorithm amplified it. Well-liked posts soared. Unpopular posts simply went unseen. Google had an algorithm too. So did YouTube.

Journalists were given a new directive: If you wanted readers to see your stories, you had to play by the algorithm’s rules. Faceless mystery formulas had replaced the stodgy newspaper editor as the gatekeeper of information.

So when the McClatchy Company—a chain that owns 31 daily papers including the Sacramento Bee—launched its reinvention strategy last year, knowing how to get Facebook traffic was central.

“Facebook has allowed us to get our journalism out to hundreds of millions more people than it would have otherwise,” says McClatchy Vice President of News Tim Grieve, a fast-talking former Politico editor. “It has forced us, and all publishers, to sharpen our game to make sure we’re writing stories that connect with people.”

With digital ad rates tied to web traffic, the incentives in the modern media landscape could be especially perverse: Write short, write lots. Pluck heartstrings or stoke fury.

In short, be more like Upworthy. A site filled with multi-sentence emotion-baiting headlines, Upworthy begged you to click by promising that you would be shocked, outraged or inspired—but not telling you why. By November 2013, Upworthy was pulling in 88 million unique visitors a month. With Facebook’s help, the formula spread.

Even magazines like Time and Newsweek—storied publications that sent photojournalists to war zones—began pumping out articles like, “Does Reese Witherspoon Have 3 Legs on Vanity Fair’s Cover?” and “Trump’s Hair Loss Drug Causes Erectile Dysfunction.”

Newsweek’s publisher went beyond clickbait; the magazine was actually buying traffic through pirated video sites, allegedly engaging in ad fraud.

In January, Newsweek senior writer Matthew Cooper resigned in disgust after several Newsweek editors and reporters who’d written about the publisher’s series of scandals were fired. He heaped contempt on an organization that had installed editors who “recklessly sought clicks at the expense of accuracy, retweets over fairness” and left him “despondent not only for Newsweek but for the other publications that don’t heed the lessons of this publication’s fall.”

Mathew Ingram, who covers digital media for Columbia Journalism Review, says such tactics might increase traffic for a while. But readers hate it. Sleazy tabloid shortcuts give you a sleazy tabloid reputation.

“Short-term you can make a certain amount of money,” Ingram says. “Long-term you’re basically setting fire to your brand.”

CLICKBAIT AND SWITCH

Plenty of media outlets have tried to build their business on the foundation of the News Feed algorithm. But they quickly got a nasty surprise: That foundation can collapse in an instant. As Facebook’s News Feed became choked with links to Upworthy and its horde of imitators, the social network declared war on clickbait. It tweaked its algorithms, which proved catastrophic for Upworthy.

“It keeps changing,” Ingram says. “Even if the algorithm was bad in some way, at least if it’s predictable, you could adapt.”

A pattern emerged. Step 1: Media outlets reinvent themselves for Facebook. Step 2: Facebook makes that reinvention obsolete.

Big publishers leaped at the chance to publish “Instant Articles” directly on Facebook, only to find that the algorithm soon changed, rewarding videos more than posts and rendering Instant Articles largely obsolete. So publishers like Mic.com, Mashable and Vice News “pivoted to video,” laying off dozens of journalists in the process.

“Then Facebook said they weren’t as interested in video anymore,” Ingram says. “Classic bait and switch.”

Which brings us to the latest string of announcements: The News Feed, Zuckerberg announced in January, had skewed too far in the direction of social video posts from national media pages and too far away from personal posts from friends and family.

They were getting back to their roots.

Even before the announcement, news sites had seen their articles get fewer and fewer hits from Facebook. Last year, Google once again became the biggest referrer of news traffic as Facebook referrals decreased. Many sites published tutorials pleading with their readers to manually change their Facebook settings to guarantee the site’s appearance in their news feeds.

“Some media outlets saw their traffic decline by as much as 30 to 40 percent,” Ingram says. “Everybody knew something was happening, but we didn’t know what.”

It might be easy to mock those who chased the algorithm from one trend to another with little to show for it. But the reality is that many of them didn’t really have a choice.

“You pretty much have to do something with Facebook,” Ingram says. “You have to. It’s like gravity. You can’t avoid it.”

In subsequent announcements, Facebook gave nervous local news outlets some better news: They’d rank local community news outlets higher in the feed than national ones. They were also launching an experiment for a new section called “Today In,” focusing on local news and announcements, beta-testing the concept in certain cities.

But in early tests, the site seemed to have trouble determining what’s local.

The San Francisco Chronicle and other Bay Area news outlets say they’re taking a “wait-and-see” approach to the latest algorithm, analyzing how the impact shakes out before making changes. They’ve learned to not get excited.

“It just, more and more, seems like Facebook and news are not super compatible,” says Shan Wang, staff writer at Harvard University’s Nieman Journalism Lab.

At least not for real news. For fake news, Facebook’s been a perfect match.

FAKING IT

There was a time Facebook was positively smug about their impact on the world. After all, they’d seen their platform fan the flames of popular uprisings during the Arab Spring in countries like Tunisia, Iran and Egypt.

“By giving people the power to share, we are starting to see people make their voices heard on a different scale from what has historically been possible,” Zuckerberg bragged in a 2012 letter to investors under the header, “we hope to change how people relate to their governments and social institutions.”

And Facebook certainly has—though not the way it intended.

A BuzzFeed investigation before the 2016 presidential election found that “fake news” stories, whether hoaxes or hyper-partisan falsehoods, actually performed better on Facebook than stories from major trusted outlets like The New York Times.

That, experts speculated, is another reason why Facebook, despite its massive profits, might be pulling back from its focus on news.

“As unprecedented numbers of people channel their political energy through this medium, it’s being used in unforeseen ways with societal repercussions that were never anticipated,” writes Samidh Chakrabarti, Facebook’s product manager for civic engagement, in a recent blog post. The exposure was widespread. A Dartmouth study found about a fourth of Americans visited at least one fake-news website—and Facebook was the primary vector of misinformation. While researchers didn’t find fake news swung the election, the effect has endured.

Donald Trump has played a role. He snatched away the term used to describe hoax websites and wielded it as a blunderbuss against the press, blasting away at any negative reporting as “fake news.”

By last May, a Harvard-Harris poll found that almost two-thirds of voters believed that mainstream news outlets were full of fake news stories.

The danger of fake news, after all, wasn’t just that we’d be tricked with bogus claims. It was that we’d be pummeled with so many different contradictory stories, with so many different angles, that the task of trying to sort truth from fiction just becomes exhausting.

So you choose your own truth. Or Facebook’s algorithm chooses it for you.

Every time you like a comment, chat or click on Facebook, the site uses that to figure out what you actually want to see: It inflates your own bubble, protecting you from facts or opinions you might disagree with.

And when it does expose you to views from the other side, it’s most likely going to be the worst examples, the trolls eager to make people mad online, or the infuriating op-ed that all your friends are sharing.

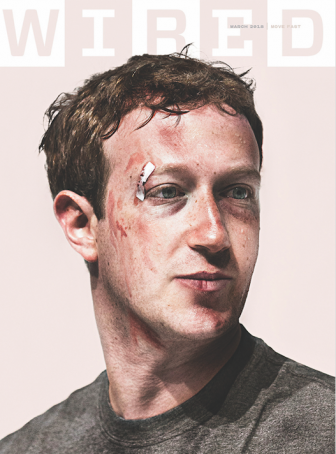

Facebook exec Chakrabarti’s blog post takes the tone of an organization working to understand how its platform was hijacked by foreign agents and then taking actions to get a grip on the situation. However, Wired Magazine’s March 2018 cover story—an in-depth investigation into just what happened at Facebook between the 2016 primaries and now—reveals that, at the time, Facebook was caught entirely off guard.

In November, shortly after Donald Trump won the White House, Zuckerberg claimed that it was “pretty crazy” to think that fake news on Facebook had influenced the election. It wasn’t until the spring and summer of 2017 that Facebook began to truly understand how their platform was used—in a coordinated and deliberate way—to spread disinformation and sow discord in the American electorate.

Many of the 3,000 Facebook ads that Russian trolls bought to influence the election weren’t aimed at promoting Trump directly. They were aimed at inflaming division in American life by focusing on such issues as race and religion.

Facebook has tried to address the fake news problem—hiring fact checkers to examine stories, slapping “disputed” tags on suspect claims, putting counterpoints in related article boxes—but with mixed results. The recent Knight Foundation/Gallup poll, meanwhile, found that those surveyed believed that the broader array of news sources actually made it harder to stay well-informed. And those who grew up soaking in the brine of social media aren’t necessarily better at sorting truth from fiction. Far from it.

“Overall, young people’s ability to reason about the information on the internet can be summed up in one word: bleak,” Stanford researchers concluded in a 2016 study of over 7,800 students. More than 80 percent of middle-schoolers surveyed didn’t know the difference between sponsored content and a news article.

It’s why groups like Media Literacy Now have successfully pushed legislatures in states like Washington to put media literacy programs in schools. That includes teaching students how information was being manipulated behind the scenes, says the organization’s president, Erin McNeill.

“With Facebook, for example, why am I seeing this story on the top of the page?” she asks. “Is it because it’s the most important story, or is it because of another reason?”

But Facebook’s new algorithm threatens to make the fake news problem even worse. By focusing on friends and family, it could strengthen the filter bubble even further. Rewarding “engagement” can just as easily incentivize the worst aspects of the internet.

You know what’s really good at getting engagement? Hoaxes. Conspiracy theories. Idiots who start fights in comments sections. Nuance doesn’t get engagement. Outrage does.

“Meaningful social interactions” is a hard concept for algorithms to grasp.

“It’s like getting algorithms to filter out porn,” Ingram says. “You and I know it when we see it. [But] algorithms are constantly filtering out photos of women breastfeeding.”

Chronicle editor Audrey Cooper says she naively hoped Facebook’s corporate conscience would lead to a realization that it has an obligation to more than its shareholders.

“Yet it is increasingly clear to me that Facebook, Twitter and, to some extent, Google, have no such compass,” she tells San Jose Inside. “It is not Facebook’s job to help journalists find a workable business model; however, it is absolutely their responsibility to be transparent about how they are manipulating public opinion and discourse. They have failed to do that so far because there are significant financial liabilities in doing so—a cynical and disturbing justification.”

Facebook hasn’t wanted to push beyond the algorithm and play the censor. In fact, it’s gone in the opposite direction. After Facebook was accused of suppressing conservative news sites in its Trending Topics section in 2016, it fired its human editors. (Today, conspiracy theories continue to show up in Facebook’s Trending Topics.)

Instead, to determine the quality of news sites, Facebook is rolling out a two-question survey about whether users recognized certain media outlets, and whether they found them trustworthy. The problem, as many tech writers pointed out, is that a lot of Facebook users, like Trump, consider the Washington Post and the New York Times to be “fake news.”

The other problem? There are a lot fewer trustworthy news sources out there. And Facebook bears some of the blame for that, too, Cooper says.

“I’ve built my career on exposing hypocrisy and wrongdoing and expecting more of those with power, which is why I have repeatedly said Facebook has aggressively abdicated its responsibility to its users and our democracy,” she says. “I expect a lot more from them, as we all should.”

FEAST AND FAMINE

The internet, obviously, has been killing newspapers for a very long time. Why, say, would you pay a monthly subscription to the Daily Cow, when you can get the milk online for free? It killed other revenue sources as well. Craigslist cut out classified sections. Online dating killed personal ads. Amazon put many local mom-and-pop advertisers out of business.

At one time, alt-weeklies could rake in advertising money by selling cheaper rates and promising advertisers a younger, hipper, edgier audience. But then Facebook came along. The site let businesses micro-target their advertisements at incredibly specific audiences. Like Google, Facebook tracks you across the web, digging deep into your private messages to figure out whether to sell you wedding dresses, running shoes or baby formula.

It’s not that nobody’s making massive amounts of money on advertising online. It’s just that only two are: Facebook and Google—and they’re both destroying print advertising.

The decline in print advertising has ravaged the world of alt-weeklies, killing icons like the Boston Phoenix, the San Francisco Bay Guardian, the Philadelphia City Paper and the Baltimore City Paper. Dailies keep suffering, too, no matter how prestigious or internet-savvy. In January and February, the Bay Area News Group, a regional chain that includes several community newspapers in the South Bay and the Pulitzer Prize-winning Mercury News, slashed staff.

Yet the convergence of layoffs with the pressure to get web traffic has influenced coverage. When potential traffic numbers are an explicit factor in story selection and you’re short-staffed, you have to make choices. Stories about schools don’t get many clicks. Weird crime stories do—like the Willow Glen Resident’s reporting on a serial cat killer who was convicted last year.

Asked if there’s any reason for optimism, Ingram, at the Columbia Journalism Review, lets out a wry laugh. If you’re not a behemoth like BuzzFeed, he says, your best bet is to be small enough to be supported by die-hard readers. That’s held true in Palo Alto. As most cities struggle to support a single publication, and as Bay Area News Group decimates staff at its South Bay community papers, the city of 70,000 is home to three local newspapers: the Daily Post, the Daily News and the Palo Alto Weekly.

“If you’re really ... hyper-focused—geographically or on a topic—then you have a chance,” Ingram says. “Your readership will be passionate enough to support you in some way.”

That’s one reason some actually welcome the prospect of less Facebook traffic. Slate’s Will Oremus recently wrote that less news on Facebook would eventually cleanse news of “the toxic incentives of the algorithm on journalism.”

Maybe, the thinking goes, without a reliance on Facebook clicks, newspapers would once again be able to build trust with their readers. Maybe, the hope goes, readers would start seeking out newspapers directly again.

“The Chronicle wants people to read our stories on any platform they prefer,” Cooper says. “We want people to be exposed to excellent, responsible journalism—especially now when so much of what masquerades as ‘news’ is anything but. We may change how we technically distribute news on various platforms as they all continue to evolve. What we won’t do is change the type of stories we pursue and how we choose to tell them.”

While Cooper has yet to hear from Zuckerberg about her open letter, she says she’s gotten feedback from dozens of current and former Facebook employees, investors and advisers who told her, essentially, “You’re on the right track.”

“They’re starting to say this publicly now, too,” Cooper says. “That’s encouraging.”

Also encouraging is a recent announcement from her corporate team that Facebook plans to roll out some kind of subscription model.

“I don’t know a lot about what it will entail, only that we will be among the first publishers to work with them on increasing our digital subscriptions,” Cooper says. “While I’m encouraged that they are showing some level of interest in fulfilling their past promises, I’m skeptical about their interest in truly promoting healthy public discourse. I hope they prove me wrong.”

A version of this article first appeared in the Inlander. Metro News/San Jose Inside Editor Jennifer Wadsworth contributed to this report.

Fake news? Conspiracy theories?

So WHO invented and promoted — and continues to promote — the FAKE NEWS “Trump-Russia conspiracy” narrative?

The Hillary Clinton Campaign

The Democrat National Committee

Fusion GPS

MSNBC

CNN

New York Times

Washington Post

AND . . . .

Facebook

just to name a few.

The loss of reputable news sources at the local level is now being filled by deceitful sponsored sources, helped along by their listing in the Google News index. There is a developer-sponsored website called “Cupertino Today,” with associated Facebook, Twitter and YouTube accounts, that mixes local stories with developer propaganda about the Vallco redevelopment meant to sway local opinion. Sand Hill Property Company spent millions in a failed attempt to have its Vallco redevelopment approved through Cupertino’s 2016 Measure D, and it now looks like it is backing this covert PR attempt to attack opponents and again promote its Vallco development plan. The clues that the Cupertino Today website is a covert PR operation can be seen in the claims of the self-proclaimed “news junkie” publisher, Phil Siegel, who fails to reveal that he is a corporate public relations consultant, Media Works, from San Francisco. The “news” on the site is all authored by an anonymous “staff reporter” and comes mainly from school and Cupertino Chamber of Commerce press releases and from the developer’s other property, “Main St. Cupertino.” In a sophisticated PR operation, the Cupertino Today website gained inclusion in the Google News index, so it appears in Google News searches and gains apparent legitimacy, even though its lack of transparency and accountability violates the Google News index guidelines. As a result, the website can catch the eyes of viewers seeking local Cupertino news, expose them to the Vallco propaganda and “polls” featured on the site, and collect commenters’ email addresses for future campaigns. In some “news” items, it has mixed reporting with propaganda, such as combining a story about a school district budget deficit with a Vallco housing plan. Under the guise of a legitimate news site, and with the help of the Google News index, the “Cupertino Today” PR operation can catch unsuspecting readers and sway public opinion in ways that developers’ websites and campaign websites cannot.