“Hello world,” says a robotic voice over ominous music, as vibrant waves against a dark backdrop trace the fluctuations in its tone. “Can I just say, I am stoked to meet you,” it continues. “Humans are super cool.”

The worse-than-Siri-sounding voice is reading tweets from Tay, a Microsoft artificial intelligence Twitter bot that launched in March 2016 and was shut down a mere 16 hours (and 96,000 tweets) later for posting inflammatory, sexist, and racist tweets.

This computerized reading of an AI project gone completely wrong opens the documentary Coded Bias, which follows academics and activists as they research and fight the problems Tay exhibited.

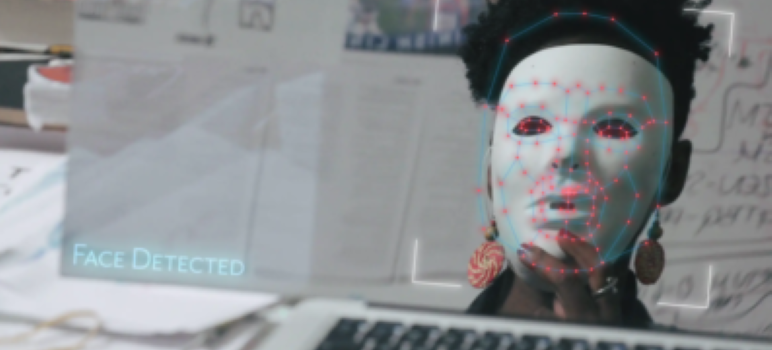

But the dangers extend beyond degrading posts to life-changing issues. Facial recognition software, for example, has historically failed to detect dark-skinned faces, and Coded Bias cheekily demonstrates the “fix”: donning a white mask.

In its simplest form, artificial intelligence (AI) means leaving a decision to a computer program rather than a human. Its current applications seem endless and crop up in daily interactions most people aren't even aware of: viral Facebook posts, credit score calculations and loan approvals, even the pre-screening of job candidates before a human ever reads a resume. As if the potential for error in these applications weren't dire enough, AI has also been found responsible for denying Black patients kidney transplants.

Following a screening of the film hosted by the San Jose Human Rights Institute Monday, Coded Bias’ director Shalini Kantayya had some words of encouragement for Silicon Valley residents worried about AI.

“I think we've had this reverence around big tech in our culture, that big tech can solve all of our problems,” Kantayya said during a Q&A. “San Jose really matters when it comes to legislating these companies because some of these companies are in your district … When you send a note to your representative, it actually makes a difference.”

However, she noted that change is still far off for some of the damaging algorithms developed in Silicon Valley, specifically calling out the power that Mark Zuckerberg, a Santa Clara County resident, has gained for himself and his company through AI.

One of the AI applications that concerns Kantayya, predictive policing, is used by the San Jose Police Department. She explained that these algorithms are used to forecast where crimes will be committed, which often results in communities of color being over-patrolled compared with white communities.

A budget of $160,000 was approved in October 2020 for SJPD to acquire the “Crime and Mobile Predictive Analytics Software Suite to provide patrol staff with an accessible resource to proactively predict and prevent crimes.”

Coded Bias is part of a growing national movement to regulate uses of AI in both the public and private sectors. While average folks can’t reprogram the world’s tech, some residents have found success by treating knowledge as power: extensively questioning elected officials’ decisions and creating citizen oversight boards to enforce accountability.

Yet regulation has been outpaced by technological advances because of what Kantayya calls a “literacy gap around how these systems work.”

San Jose resident Masheika Allgood has made it her mission to close that gap.

During another public session at San Jose State University (SJSU), titled “AI Literacy is a Civil Rights Issue,” Allgood explained that a widespread lack of understanding often leaves responsibility for ethics to the people who develop the technology. She argues that shouldn't be the case.

“We all have a role to play in ensuring that the primary focus and result of the use of AI in society is the betterment of our society as a whole, and of our individual lives,” she said.

Allgood, founder of AllAI Consulting (pronounced “ally”), left the legal profession to explore the intersection of technology and law, eventually sharing her concerns with top experts while working at Nvidia in Santa Clara.

“The power imbalance is so great,” she said. “The fear on the part of people who don't want to get called out for talking about something they don't fully understand is driving us to create this siloed view of the tech that just creates really bad outcomes.”

Both sessions were part of Transforming Communities: A Movement to Racial Justice, a broader series of events at San Jose State organized under the guidance of Jahmal Williams, the university's Director of Advocacy for Racial Justice.

“This event has the potential to activate the entire region around the goal of learning about, and providing racial justice to all of its residents,” Williams said in an email. “If we do not have people in place to ensure that historically oppressed communities have a voice and are represented through this new technology, it could have dire effects [on] populations already suffering.”

A panel further discussing AI is scheduled for Nov. 10 at 3 p.m., and Transforming Communities: A Movement to Racial Justice continues through Nov. 13. Coded Bias is currently available to stream on Netflix.
